My Take on Generative AI

I've been trying an experiment lately in which I used ChatGPT during my brainstorming process for a completely new project, just to see how well it would do. It's terrible at coming up with a coherent plot--it forgets a lot of what it just wrote and starts to contradict itself--but for the story seed I used, it was perfect. I've been researching that idea for years, trying to figure out how it might work in a story, and never found anything that gave me a decent starting point. (It's a music-based magic system, but every previous design I'd attempted became far too complex for me to write into a story, at least with my abilities as a novelist. ChatGPT gave me the best idea yet of what something like that could look like without being too complex for me to write. That was a perfect jumping-off point: I then had the AI take me through a couple of possible plot threads, which I unraveled and wove into my own plot-string.)

ChatGPT did great at that, but I wouldn't trust it to actually write a novel of any quality, at least not at this point.

The second stage of my experiment has been to write a few scenes on my own, run them through ChatGPT, and ask it for feedback. Results: 95% of its feedback was utter garbage, and what I wrote was leagues better than what it wanted me to edit in (frequently grammar and phrasing changes; its primary goal was conciseness, while my primary goal is to describe what's going on in the story in a very particular way that can only be achieved with certain words). However, the 5% of its feedback that wasn't garbage included little tidbits that genuinely made pieces of my scenes a little better, and it gave me ideas for other ways the scenes could have gone had I characterized the protagonist differently.

One amusing thing is that, in the second scene I gave it, the protagonist gets highly stressed and overwhelmed and nearly faints. I wrote this scene based on an actual experience of my own a couple of weeks prior, so I knew exactly what the lead-up to that faint had felt like and described the character's experience based on that. The AI took one look at that and basically said, "Nope, I think you should describe the fuzzy black dots at the edge of her vision and stuff, and maybe how all the people feel really far away." To which I replied, "You are a bit of programming that's trying to tell me that the most common description for this is the best description for it--and given my own experience, that is incorrect."

So, I guess at this point, here's my take on AI in general, and what I'd tell any brand-new writers who asked me: Use it to ideate, brainstorm, and kick off your own creativity--but don't let it put too much work in on a project. If I'm reading your book or looking at your art, it's because I want to see YOUR creativity and how YOU view the world. AI is great for ideating your project to give you a place to start, but if it's creating your product for you instead of smoothing out your process, I'm not interested.

I wrote this first section over a month ago now, and since then I've been doing more experiments with ChatGPT on my official Work in Progress, currently titled The Noble Thief. I recently decided I wanted to get back into revising this project and really hammer down what I want the plot to be before I roll into more specific, detailed edits.

Since I already had thirteen chapters of this project when I decided to start this experiment, and had gotten stuck in a section of the book where the protagonist is traveling through a forest with a terrifying reputation, I decided to take a different approach to the section than I had done previously. Where before I'd stumbled through that section of the story without knowing what I really wanted it to look like and how it would impact my protagonist, I instead went to ChatGPT and said, "Give me a sample scene of a character who was once a princess and is now a thief exploring a dangerous, terrifying forest." The AI gave me a scene, then asked me if I wanted it to write another scene off the same premise, but with a different flavor.

After running the AI through about twelve different scenes to fill my head with ideas for the language I could use in describing this forest, I began to get some ideas--but first I wanted to know what ChatGPT thought of the rest of the story, so I copied the story into the text box one chapter at a time and asked the AI for feedback. As with the previous experiment, most of the feedback it gave was not particularly helpful--the AI tends to focus on line-edit-level feedback when what I really need is an alert about plot holes. However, despite the majority of its feedback being nearly useless to me at this stage (and, frankly, in general, because I don't trust most of its line edits beyond typos), the simple act of the AI spitting back a general analysis of each scene somehow helped the idea-seeds in my head grow, and I threw myself into writing the forest sequence. To continue the experiment and keep the ideas flowing, I've been stopping after each chapter and running it through ChatGPT, just to see what it says.

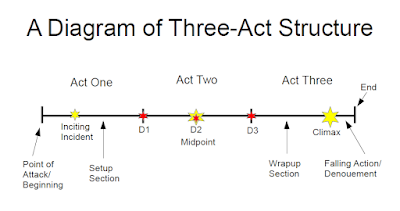

Now, a few days later, I am ten chapters farther into the story than I was before, and still going strong--I've just hit the midpoint crisis where we realize how dire the situation really is, and I'm beginning to get a clearer idea of how the rest of the story will go than I think I've ever had before.

In the comment-section conversation on Patricia Wrede's blog that initially inspired this whole post, another commenter noted that they primarily use ChatGPT for "rubber ducking." The term comes from programming: the programmer explains what their code is doing, in extremely simple terms, to a rubber duck sitting by their computer. In so doing, they clarify their understanding of the project and frequently figure out where the bug in their code is.

In many ways, what I've been doing is kind of like reverse rubber ducking. When I give ChatGPT sections of my story and have it give me "feedback," the quality of that feedback is questionable most of the time, but I usually get to see what the AI recognizes as happening in each scene. That tells me whether I've succeeded in conveying the ideas in my head clearly (something that has historically been an issue for me, as what's in my head doesn't always make it onto the page). I do occasionally take bits and pieces of its suggestions, but most of the time, if I go back and do a line edit based on what it said, I find the core principle behind the AI's suggestion and use that to make my own edits to the flagged moment.

I think this is the best way I've found so far to use generative AI in the writing process. It's not about finding a quicker, easier, cheaper way to make a product; it's about smoothing out my personal process. At this point, I've been through enough drafts and partial drafts that I no longer have the patience to muddle my way through the story. If there's a tool that can form a springboard for my own ideas and creativity (such as having the AI write some generic scenes from a prompt based on what I'm going for, so I can get ideas for the language I want in a given sequence), or that can help me become aware of what's actually being communicated on the page, I'm going to use it! Because, let's be honest, we authors steal language and ideas from each other all the time anyway. If I can't find books in my story's niche to steal that language and those ideas from myself, then having the AI write generic scenes to spark my own inspiration seems like a reasonable thing to do.

What I won't do is ask the AI to write the story for me. It doesn't get to write me scenes, or paragraphs, or full sentences that I cut and paste wholesale into my story. It doesn't get to mess with my storytelling voice that way. Even if the line edits it suggests are technically stronger than what exists in my draft already, I'm not going to use them--I'm going to find real people with real expertise who can help me tighten up my prose, because the one major thing that generative AI lacks is the ability to inject real emotion into prose. A lot of the edits it suggests, the scenes and paragraphs and descriptions it writes, are empty of anything that really makes me feel connected to the character. Why? Because AI doesn't actually understand emotion. It doesn't actually understand the human experience. All it knows how to do is take words and organize them in the most common manner it's seen, or in the way it's been trained to organize them.

And when the day comes that AI does have the ability to write better than a human, I don't want to use it--because as a reader, I'm not here to read what the computer wrote. I'm here to read what a real human wrote, based on their life experiences. Even if the writing is less polished or the story has more holes than the AI's, I want what's human, because what's human is what's real. AI should never be a replacement for human imagination, creativity, and inspiration. It should be a tool to support our imagination, creativity, and inspiration.

But I will admit, it gets a little morally grey here. How do you define the difference between supporting creativity and replacing it? Someone who struggles with descriptions could say that the AI-generated descriptions they use are supporting their creativity because they let them play to their strengths in other areas of writing. Someone who struggles with plotting could say that having the AI write their plot summary for them supports their creativity because it gives them more time and freedom to focus on creating vivid descriptions, imagery, and characters.

So I guess when it comes down to it, the right way to use AI really depends on the person involved. Some people say not to use it at all. I got the seeds for my own method from my college English professor, who said it was fine to use AI in his class for brainstorming and feedback, but not for writing the actual paper. Other people are just fine choosing a popular topic and telling AI to write them a book on that topic, then publishing it under their own name to make a bunch of money.

I guess what makes the "right" thing to do with AI so hard to figure out is that every person has a slightly different opinion on it. My advice is to do a little experimenting with it and see what you think. Feel out for yourself what the right answer is. And ultimately, be creative! Pour your heart and soul into your work, because when I read, or look at art, or watch a movie, the heart and soul of the creator is what I really want to see.
